The ongoing 2020 World Artificial Intelligence Conference in Shanghai is widely seen as a confidence booster: artificial intelligence is not only a helper in epidemic prevention and control but also a "trump card" for economic recovery.

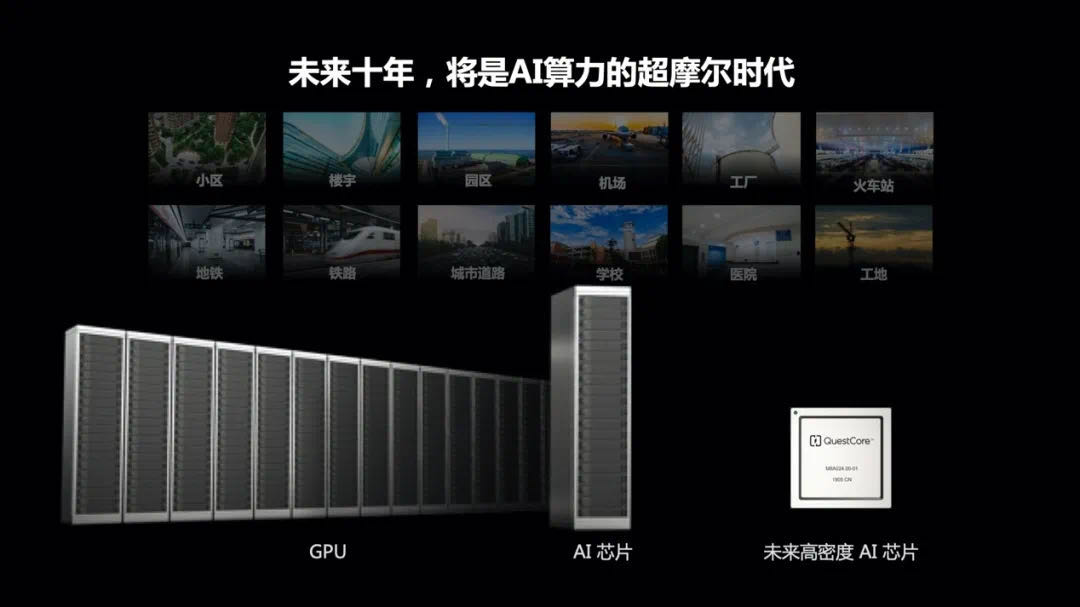

Where is AI, which carries such high expectations, headed? Zhu Long, co-founder and CEO of YITU Technology, a leading Chinese artificial intelligence company, offered a prediction in his speech: "The next ten years will be the super Moore era of AI computing power." "Infrastructure change will promote the leap of human society into the machine civilization."

Here is the complete content of Zhu Long's speech, organized without changing the original meaning, to share with everyone:

I have been engaged in artificial intelligence research for nearly 20 years and have lived through different stages of AI development, from the early ups and downs, to AI becoming popular, to today's discussions of where AI will go in different industries.

Today, when we talk about artificial intelligence, let's first look at what era we are in. The development of human civilization has gradually evolved from the earliest primitive civilization to agricultural civilization, to industrial civilization, and to today's Internet era. Artificial intelligence began more than 60 years ago with the Turing test. Looking at the development of human civilization throughout history, what has driven the changes in human civilization? What has happened in this process of development and change?

Infrastructure change promotes the leap of human civilization to machine civilization

For a long time, we may have forgotten that the most common tools in life are the most important basis for social changes.

In the most ancient times, stone tools, bows and arrows, fire, and pottery constituted a simple primitive civilization. Then, in agricultural society, iron tools, writing, printing, and wheels constituted the foundation of agricultural civilization. In modern times, the invention of steam engines, electricity, internal combustion engines, and other tools constituted the foundation of industrial civilization. In the past few decades, the Internet and semiconductor chips have constituted the foundation of information civilization.

Here is a very important point to share with everyone: the development and change of civilization lie in the revolution of infrastructure, that is, the change of production tools.

Looking at the development of human civilization from a historical perspective, where are we today?

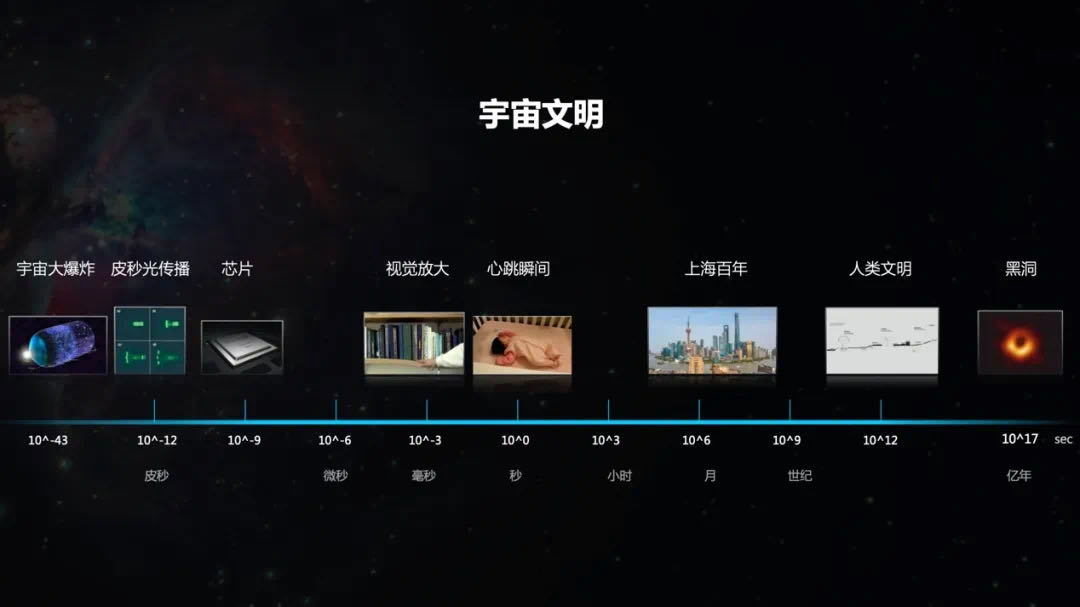

The smallest unit of time we know of in the universe is ten to the minus forty-three seconds after the Big Bang. From this smallest unit of time, to the 1-second instant of a human heartbeat, to the history of a city, to the history of mankind, to the more distant history of galaxies hundreds of millions of years ago that humans have tried to observe: this is the development and change of cosmic civilization seen along the time axis of the physical world.

The following photos are Shanghai 100 years ago, Pudong 30 years ago, and Shanghai today. The birth and development of photography technology have given us a tool that can depict the changes of cities in different periods. This set of photos of Shanghai is a change on a century scale.

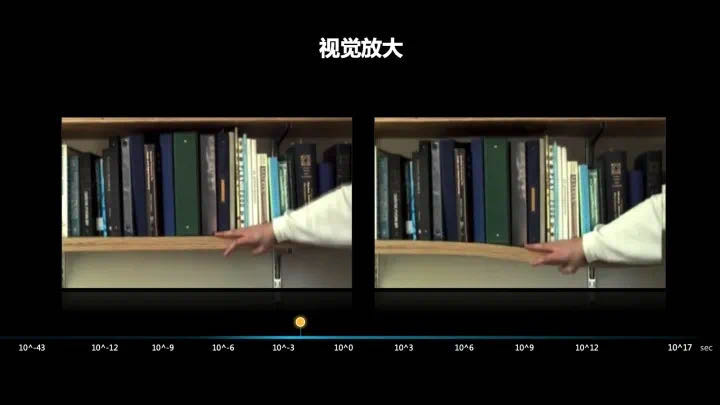

Let's look at changes in the physical world on a one-second scale. The photo on the left is an ordinary shot of a hand holding a bookshelf. In the photo on the right, a machine picks out the different frequency signals in the video, captures the tiny vibrations, and magnifies them 100 or 1,000 times, so you can see how the wood responds to force, something very subtle on the time axis.

Let's look at a more macro world. The photo on the left is a galaxy photographed by the Hubble telescope in 2006, and the photo on the right is a 2019 attempt to photograph the black hole at the center of a galaxy.

There is a theoretical calculation in academia that a black hole can be photographed by combining several radio telescopes around the world. To take such a photo with a single telescope, its span might need to range from several kilometers to tens or even thousands of kilometers. So humans used a different technique: piecing together a black hole photo from radio telescopes at different locations on Earth. The result is a computed observation, and the actual situation should be very close to this theoretical simulation.

Humans have photographed the universe as it was five hundred million years ago, as far back as we can observe. On the time axis, this is a very distant past. It shows that humans can observe everything from the very small to the very distant. Now, taking this moment as the center, the past 30 years were the information age, and the next 30 years will be the AI era. We have seen what happened in the past 30 years, and we can predict what will happen in the coming AI era.

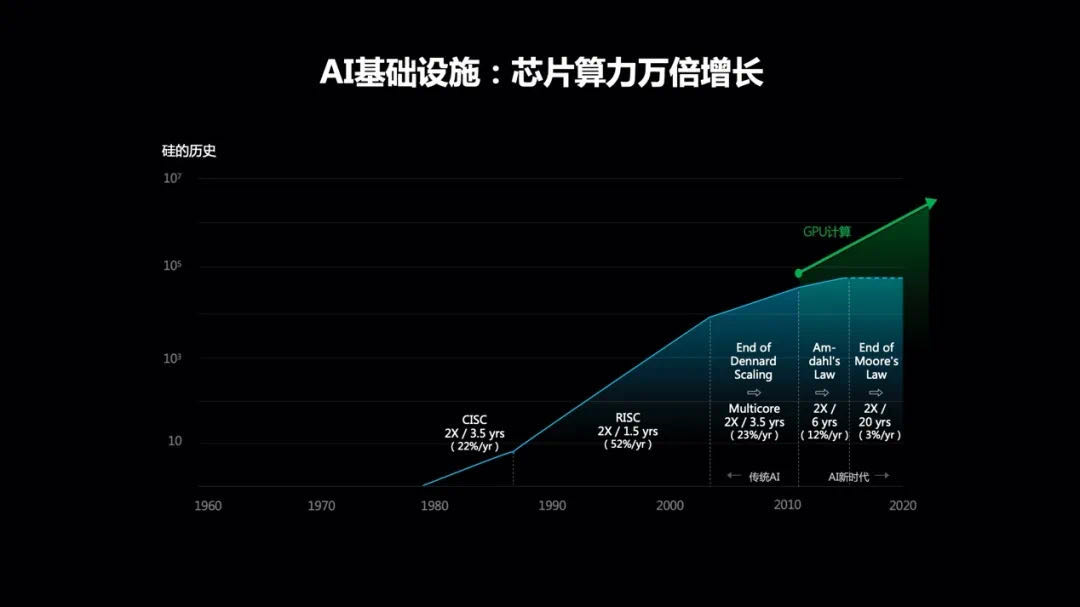

Chip computing power, algorithm level, algorithm model, training computing power have increased by ten thousand times

The three most important elements of the information age are computing power, storage capacity, and transmission speed. The most important change in the past 30 years of the information age is that these three elements - computing power, storage capacity, and transmission speed - have all increased by a million times.

In the past few decades, the development of computing power has been divided into two stages, one is the blue curve, and the other is the green curve. We call them the old or traditional era chips, and the new era chips based on GPUs or customized for AI computing. The blue curve is based on the traditional Moore's Law, with performance doubling every 18-24 months; the green curve is the accelerated super Moore era chip computing power, which has increased by nearly ten thousand times in the past few years, and the computing power of a single GPU is a thousand times more than that based on CPUs.
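As a rough check on these figures, compound doubling can be worked out directly. The sketch below assumes idealized, perfectly steady doubling, and the function name `growth_factor` is illustrative rather than anything from the speech. Under that assumption, doubling every 18 months for 30 years gives roughly a million-fold increase, while doubling every 24 months gives about 33,000-fold.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Total multiplier after `years` of steady doubling every `doubling_months`.

    Assumes idealized exponential growth; real hardware progress is uneven.
    """
    doublings = years * 12 / doubling_months
    return 2 ** doublings


fast = growth_factor(30, 18)  # 20 doublings
slow = growth_factor(30, 24)  # 15 doublings
print(f"18-month doubling over 30 years: {fast:,.0f}x")
print(f"24-month doubling over 30 years: {slow:,.0f}x")
```

The 18-month case lands close to the million-fold increase cited for the information age, which suggests the two figures are consistent with each other.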

So how much has the algorithm level improved? Everyone may still remember AlphaGo's 2015 match against human players. Since 2015, machines have been able to outplay humans at Go and to surpass humans at face recognition; by 2020, machine performance had increased a million times compared with five years earlier.

In 2015, the machine's recognition ability exceeded that of humans. Taking that day as the baseline, five years later, the machine has improved by a million times compared to itself, surpassing human capabilities by a million times, and the perceptual intelligence has improved by a million times. This is a very astonishing number.
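To put that number in perspective, a growth factor over a given period can be converted into an implied doubling time. This is a minimal sketch that assumes smooth exponential growth and takes the million-fold, five-year figure from the speech at face value; the function name `implied_doubling_months` is hypothetical.

```python
import math


def implied_doubling_months(factor: float, years: float) -> float:
    """Months per doubling needed to reach `factor` in `years`, assuming steady growth."""
    doublings = math.log2(factor)
    return years * 12 / doublings


# Million-fold improvement over five years (the figure quoted in the speech).
ai_months = implied_doubling_months(1_000_000, 5)
print(f"Implied doubling time: {ai_months:.1f} months")
```

Under these assumptions the implied doubling time is roughly three months, compared with the 18 to 24 months of traditional Moore's Law.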

At the same time, the size of the model and the number of algorithm parameters it uses have also increased by ten thousand times. Just like the brain, the neurons have increased by ten thousand times compared to the past; the training efficiency and the energy consumption for learning have also increased by ten thousand times.

So now there is a saying: AI has entered a new era of computing power hegemony, and everyone needs to use computing power a thousand times or ten thousand times to train the best algorithms in the world.

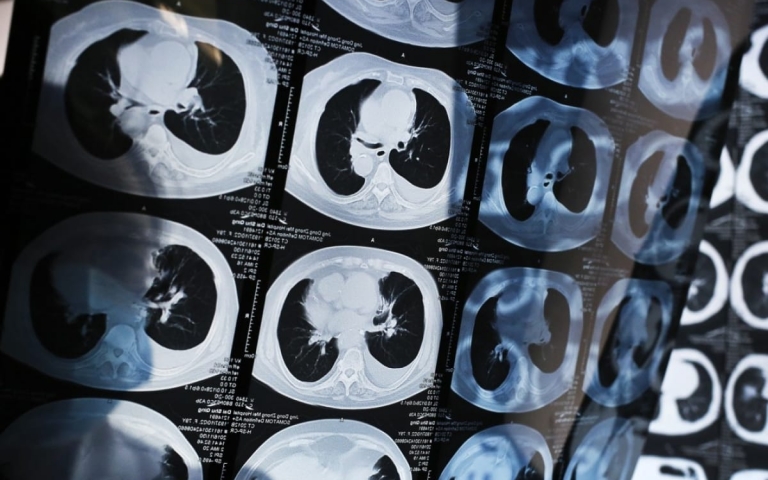

So what can AI do specifically? In the early stage of the COVID-19 pandemic, we collaborated with the Shanghai Public Health Clinical Center to develop a COVID-19 auxiliary diagnostic AI system. The system can provide descriptions of the shape, size, and other lesions based on lung CT scans, as well as qualitative diagnoses, reducing the doctor's judgment from several hours in the past to a few seconds with machine assistance. This is a kind of visual perception intelligence.

In the future, low-level perceptual intelligence will leap to high-level decision-making intelligence; from the most basic visual perception intelligence, to high-level cognitive intelligence and decision-making intelligence supported by a complete knowledge graph, and even predictive intelligence. In the field of medicine, we have already achieved this in pediatric medicine, where AI can learn nearly a million medical synonyms and nearly ten million associations based on millions of cases, reaching a diagnostic level close to that of a doctor with ten years of experience.

A few years ago, intelligent computing needed for urban management required more than a dozen cabinets to provide computing power support, requiring a lot of space, investment, and a lot of energy consumption; a year ago, due to the improvement of AI chip performance, it has been reduced from more than a dozen cabinets to one cabinet.

In the next ten years, what changes will the super Moore era bring? For the intelligent computing required for city management, a palm-sized chip will be able to support the tens of thousands or even hundreds of thousands of video channels that city management requires today.
